Load Balancing on Kubernetes

Posted by nerdcoding on May 12, 2018

When an application is deployed on several Kubernetes pods, its clients never access a pod directly. Normally a Service groups a set of pods and acts as a single access point, and incoming requests are load balanced between all of these pods. The IP address and port of a service are fixed during its lifetime, so the client does not need to care about the pods behind the service.

There are several service types, some for internal and some for external client access. Unfortunately, Kubernetes does not come with a service that does load balancing for external clients. The service type LoadBalancer only works when Kubernetes runs on a supported cloud provider (AWS, Google Kubernetes Engine etc.), because it relies on the underlying load balancing implementation of that provider. This blog post describes the options we have for load balancing with Kubernetes on an unsupported cloud provider or on bare metal.

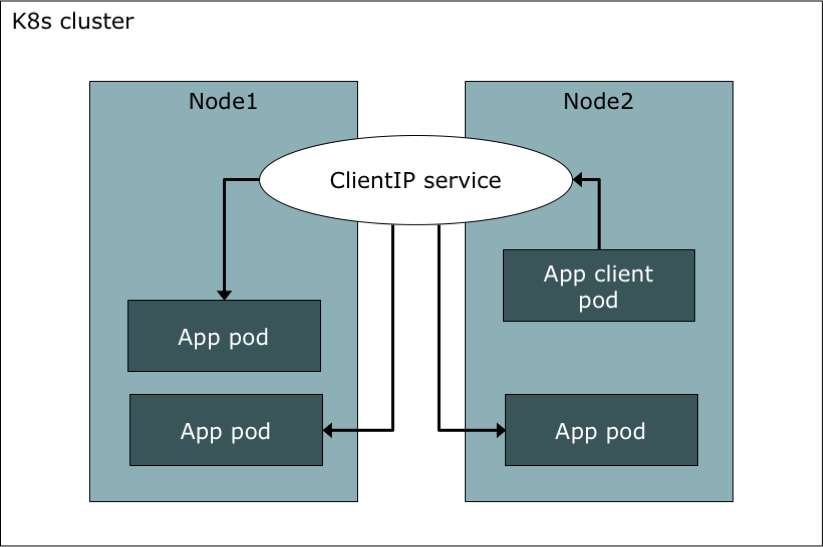

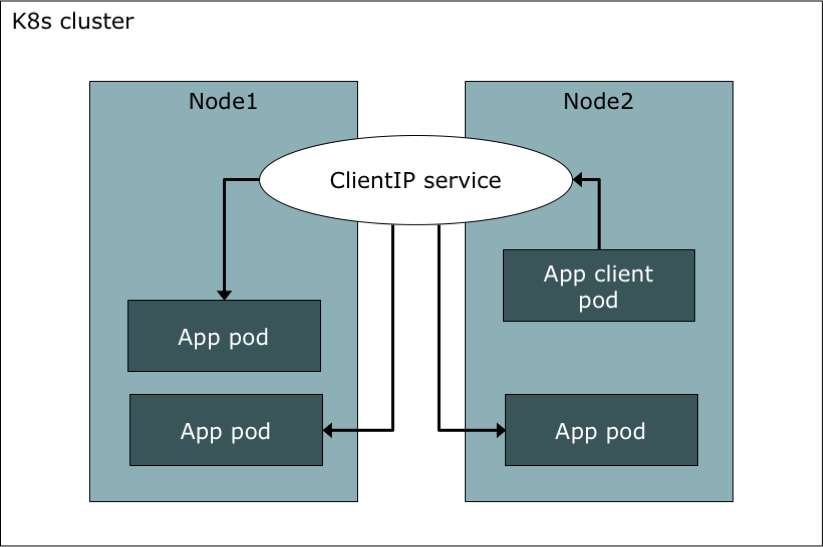

ClusterIP Service

ClusterIP is the default service type and can be used when load balancing is needed between pods running inside the Kubernetes cluster. For example, a frontend and a backend application are deployed on the same Kubernetes cluster and the frontend needs to access the backend. A ClusterIP service groups all pods of the backend application, and the frontend accesses the backend only through this service. In addition, the ClusterIP service load balances all incoming requests between the backend pods.

We assume that there is an application deployed with a Deployment controller:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: backend-app-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: backend-app  # (2)
      template:
        metadata:
          labels:
            app: backend-app  # (1)
        spec:
          containers:
          - name: backend-app
            image: <docker-registry>/backend-app
            ports:
            - containerPort: 8080

(1) All pods running this application have the label app: backend-app.

(2) The deployment is responsible for all pods with this label.

Now we define a service with the type ClusterIP:

    apiVersion: v1
    kind: Service
    metadata:
      name: clusterip
    spec:
      type: ClusterIP
      ports:
      - port: 8080
        targetPort: 8080
      selector:
        app: backend-app  # (1)

(1) All pods with this label are grouped in the service.

To actually create the service:

    $ kubectl apply -f clusterip-service.yml
    $ kubectl get svc
    NAME        TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
    clusterip   ClusterIP   10.110.191.227   <none>        8080/TCP   5s
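At this point it can be useful to confirm which pods the service actually picked up through its label selector. The following is only a small sketch: the helper name `show_service_backends` is made up for this post, while the two `kubectl` subcommands it wraps are standard. It assumes `kubectl` is already configured for the cluster.

```shell
# Hypothetical helper (not part of kubectl): show the pods matching a label
# selector and the endpoints a service resolved from them.
# Usage: show_service_backends SERVICE SELECTOR
show_service_backends() {
  service=$1
  selector=$2
  # Pods carrying the label the service selects on, including their pod IPs
  kubectl get pods -l "$selector" -o wide
  # The pod IP:port pairs the service currently forwards to
  kubectl get endpoints "$service"
}
```

For the service defined above this would be called as `show_service_backends clusterip app=backend-app`. With three replicas, the endpoints list should contain three pod addresses on port 8080.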

The IP 10.110.191.227 is internal and can only be reached from inside the cluster. Applications running inside the cluster can use this IP to access the backend application. Pods in the same namespace that are created after the service also get a set of environment variables from which the cluster IP can be looked up.

We can delete all pods, and the deployment controller will immediately create new ones. The new pods then have environment variables containing the cluster IP and port of the service:

    $ kubectl delete pod --all
    $ kubectl get pod
    NAME                           READY   STATUS    RESTARTS   AGE
    backend-app-549cb98855-crxh7   1/1     Running   0          16s
    backend-app-549cb98855-h7rwv   1/1     Running   0          16s
    backend-app-549cb98855-kcg5z   1/1     Running   0          16s
    $ kubectl exec backend-app-549cb98855-kcg5z -- env
    CLUSTERIP_SERVICE_HOST=10.110.191.227
    CLUSTERIP_SERVICE_PORT=8080
    [...]
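A process running inside such a pod could use these two variables to build the address of the service. The snippet below is a minimal sketch: the function name `backend_url` is hypothetical, and it assumes the backend speaks plain HTTP, which the post does not state explicitly.

```shell
# Build the service URL from the variables Kubernetes injected into the pod.
# The ${VAR:?message} expansion stops with an error message if a variable is
# missing, e.g. because the pod was started before the service existed.
# Assumption: the backend speaks plain HTTP.
backend_url() {
  host=${CLUSTERIP_SERVICE_HOST:?service host variable is not set}
  port=${CLUSTERIP_SERVICE_PORT:?service port variable is not set}
  printf 'http://%s:%s\n' "$host" "$port"
}
```

Inside the pod, `curl "$(backend_url)"` would then send a request to the ClusterIP service, which forwards it to one of the backend pods.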

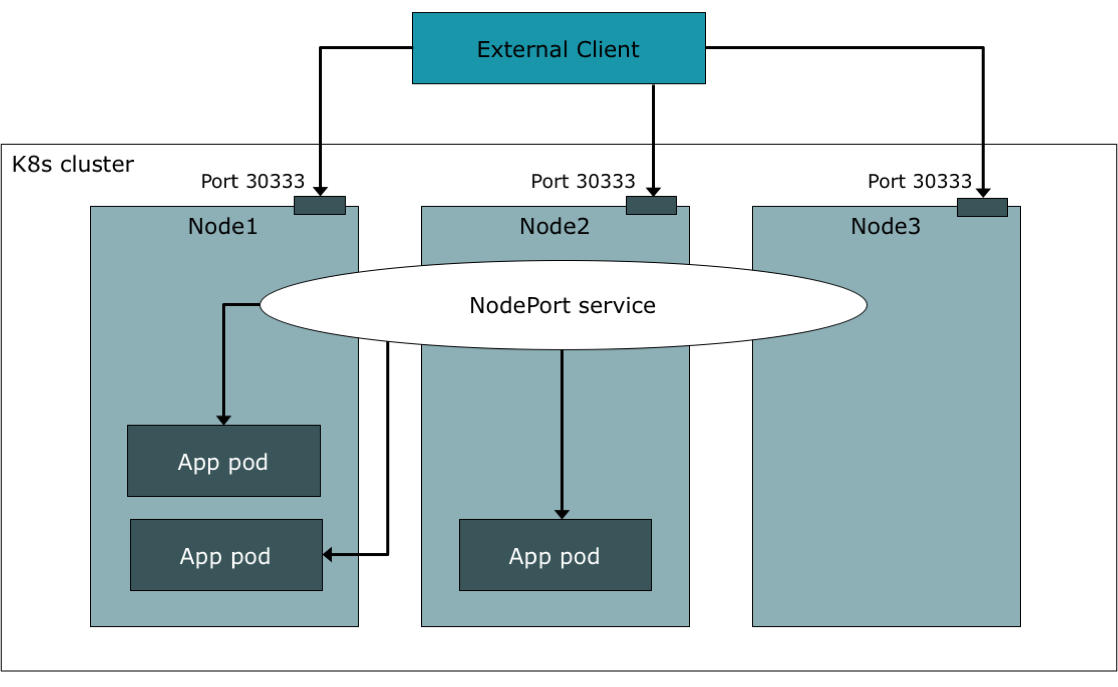

NodePort Service

Services of the type ClusterIP are fine when pods only need to be accessed from within the cluster. One way to make a service accessible to external clients is to use the type NodePort. Each node of our Kubernetes cluster has an IP address that is reachable by hosts in the network outside the cluster. With this service type, a port is opened on each cluster node, and requests arriving at that port are passed to the NodePort service. Again, the service load balances all incoming requests between all pods. A request made to one specific node can therefore be forwarded to a pod on the same node or to a pod on another node.

When we created the ClusterIP service, we assumed that there is an application deployment. We use the same deployment again for this service:

    apiVersion: v1
    kind: Service
    metadata:
      name: backend-app-nodeport
    spec:
      type: NodePort
      ports:
      - port: 8080
        targetPort: 8080
        nodePort: 30333
      selector:
        app: backend-app

    $ kubectl apply -f nodeport-service.yml
    $ kubectl get svc
    NAME                   TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
    backend-app-nodeport   NodePort   10.96.3.123   <none>        8080:30333/TCP   4s

Now there is a service of the type NodePort which opened port 30333 on every node. Suppose there are three nodes with the network IP addresses 192.168.1.21, 192.168.1.22 and 192.168.1.23. Then we can access the backend application at each node:

    curl 192.168.1.21:30333
    curl 192.168.1.22:30333
    curl 192.168.1.23:30333

The disadvantage of this scenario is that external clients need to know the IP address of one or all nodes. If an external client only accesses one node, it fails when that node fails, and if it accesses all nodes, the client needs to do the load balancing between the nodes by itself. Frequently there is an external load balancer (e.g. nginx) which balances client requests between all nodes. Thus an external load balancer (nginx) in front of the internal load balancer (NodePort service) is needed.
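To make the client-side burden concrete, here is a rough sketch of what a client has to do without an external load balancer: try the nodes one after another and use the first one that answers. The function names `first_reachable_node` and `probe_nodeport` are made up for this illustration, and the probe assumes the backend answers HTTP requests on `/`.

```shell
# Print the first node for which the probe command succeeds.
# Usage: first_reachable_node PROBE NODE...
first_reachable_node() {
  probe=$1
  shift
  for node in "$@"; do
    if "$probe" "$node" >/dev/null 2>&1; then
      printf '%s\n' "$node"
      return 0
    fi
  done
  # No node answered
  return 1
}

# Example probe: succeeds if the NodePort answers within two seconds.
# Assumption: the backend serves HTTP on "/" behind node port 30333.
probe_nodeport() {
  curl --silent --fail --max-time 2 "http://$1:30333/"
}
```

A client could then pick a node with `node=$(first_reachable_node probe_nodeport 192.168.1.21 192.168.1.22 192.168.1.23)`. This only provides failover, not real load balancing, which is why a dedicated load balancer in front of the nodes is usually the better choice.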

Services of the type LoadBalancer are actually an extension of NodePort services. As mentioned in the beginning, this service type only works on supported cloud platforms. There, the platform provider automatically creates an external load balancer in front of the Kubernetes cluster.

MetalLB for load balancing of external clients

When Kubernetes is installed on bare metal, LoadBalancer services cannot be used out of the box. We could create a NodePort service and install and configure our own load balancer which balances between all cluster nodes. Fortunately, MetalLB fills this gap and brings a load balancer implementation for bare metal Kubernetes clusters.

All we need is one unused IP address in our network, which MetalLB can assign to the load balancer. First we need to install MetalLB:

kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.6.2/manifests/metallb.yaml

This creates all the basic infrastructure in the namespace metallb-system that MetalLB needs to create the load balancer service. In the second step we create a ConfigMap to configure which unassigned network IP address should be used for the load balancer. Here I will use the IP address 192.168.1.30:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system
      name: config
    data:
      config: |
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - 192.168.1.30

And apply this config with:

$ kubectl apply -f metallb-config.yml

Now we can create a new service of the type LoadBalancer, which will run successfully on a bare metal Kubernetes cluster:

    apiVersion: v1
    kind: Service
    metadata:
      name: metallb
    spec:
      ports:
      - port: 8080
        targetPort: 8080
      selector:
        app: backend-app
      type: LoadBalancer
      loadBalancerIP: 192.168.1.30

    $ kubectl apply -f metallb-service.yml
    $ kubectl get svc
    NAME      TYPE           CLUSTER-IP       EXTERNAL-IP    PORT(S)          AGE
    metallb   LoadBalancer   10.107.135.255   192.168.1.30   8080:30942/TCP   42s

With curl 192.168.1.30:8080 the backend application is now accessible by external clients and all incoming requests are load balanced between all pods containing the label app: backend-app.